Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

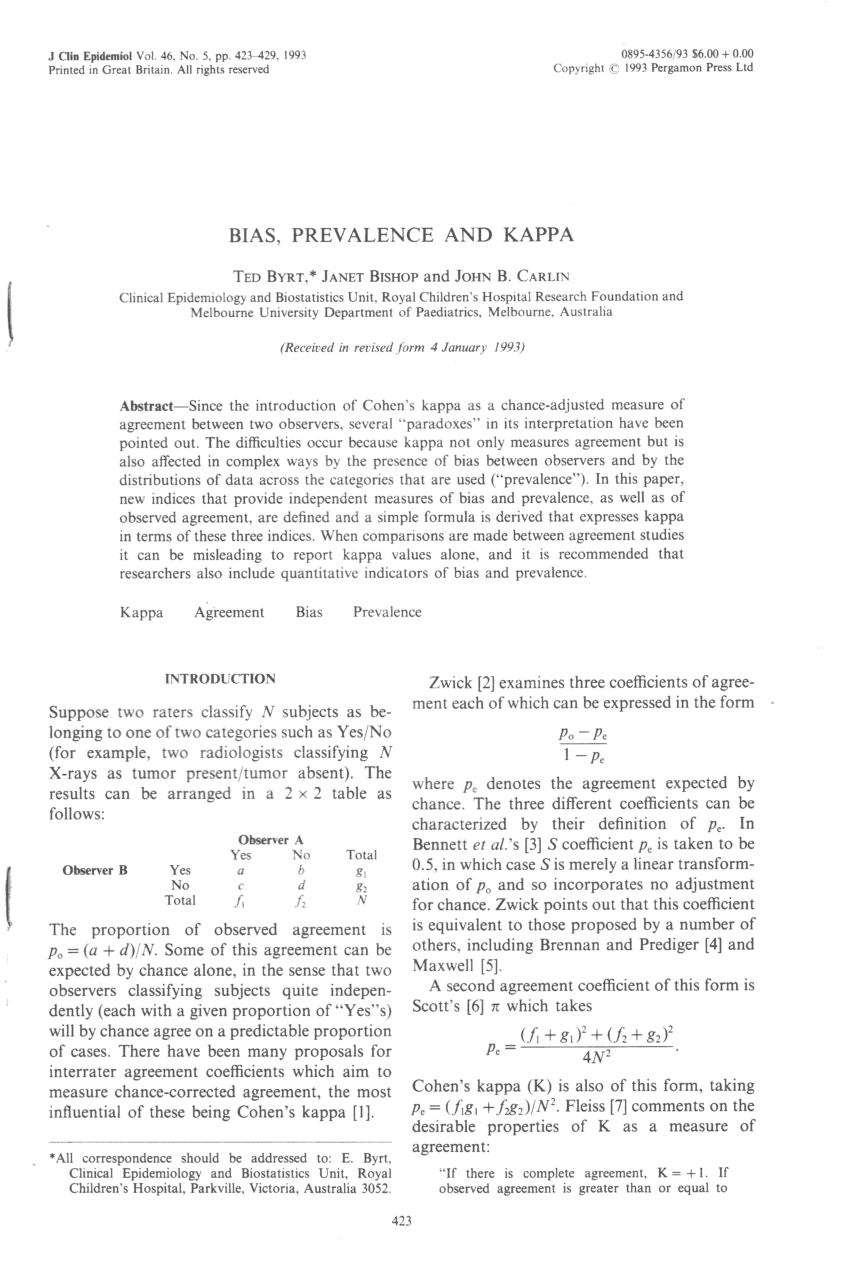

Including Omission Mistakes in the Calculation of Cohen's Kappa and an Analysis of the Coefficient's Paradox Features

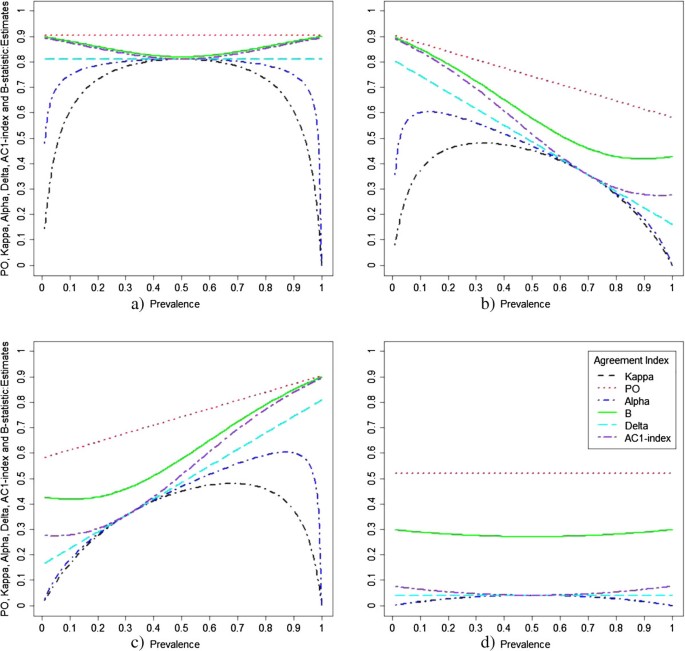

Observer agreement paradoxes in 2x2 tables: comparison of agreement measures | BMC Medical Research Methodology | Full Text

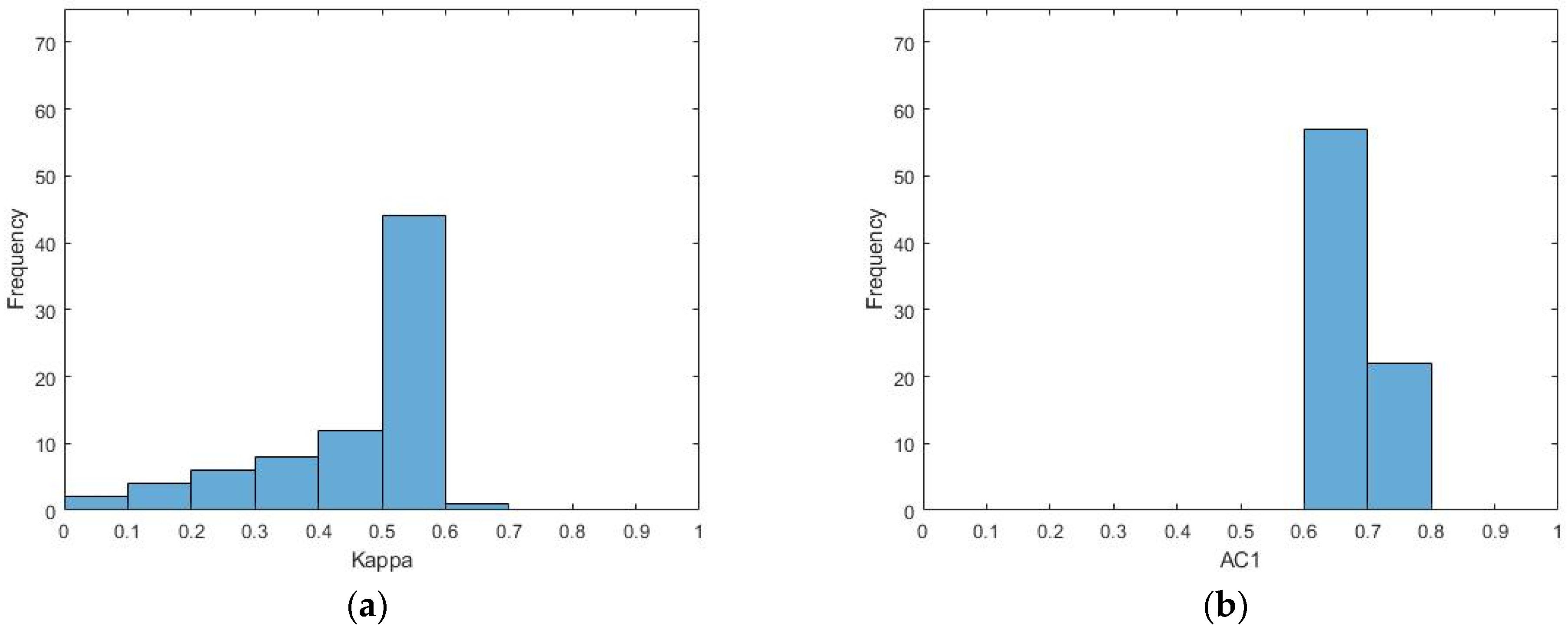

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

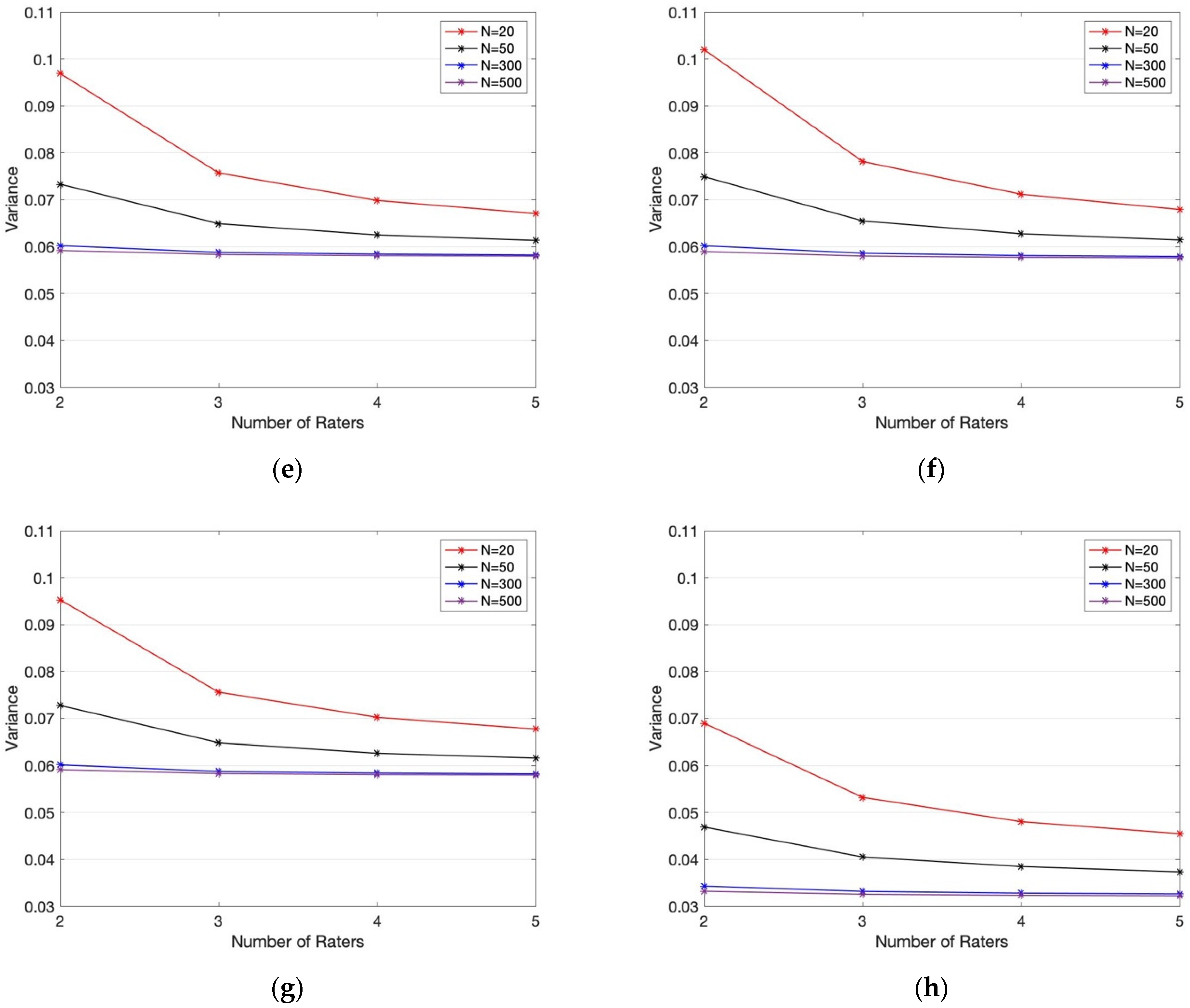

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

![High Agreement and High Prevalence: The Paradox of Cohen's Kappa | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/c348c2b3ab5510a4c0576e93747355a1d63a7347/2-Table1-1.png)

![High Agreement and High Prevalence: The Paradox of Cohen's Kappa | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/c348c2b3ab5510a4c0576e93747355a1d63a7347/4-Table2-1.png)